I am an HPE partner tasked with a two-site, 4 x 8320 design and deployment for a customer. Neither I nor the local HPE team was involved in the sale, so what we have ended up with isn't quite what we would have preferred. I have engaged HPE locally to help answer the questions below.

The short version: we have 4 x 8320 switches and a customer who expects a lot of SFP+ ports at each site, with cross-site layer 3 redundancy. Six single-mode fiber pairs are available for cross-site connectivity.

I am already familiar with configuring VSX in a single-site scenario using multi-chassis LAG (VSX LAG) to an edge switch, including the keepalive requirements, and I have read the 10.01 VSX guide multiple times. I have some questions about specific scenarios we have discussed.

Scenario 1: Create one VSX pair per site

- Is this a viable configuration (VRRP on only one member of each VSX pair)?

- Are the links between the two VSX pairs set up as a standard LAG or a multi-chassis LAG? Will this need to be one LAG or two?

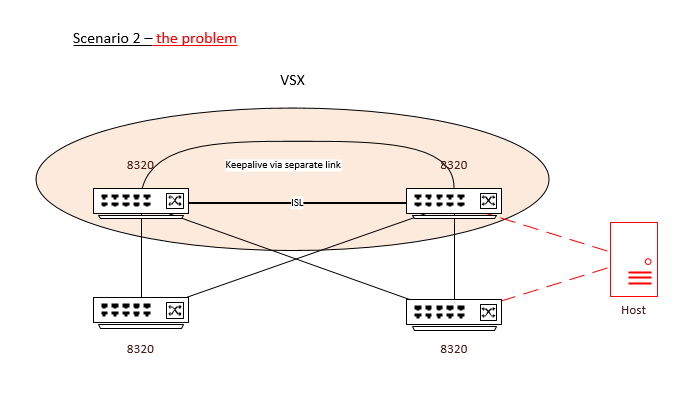

Scenario 2

In this scenario I would run a single cross-site VSX pair (one member at each site) and treat the other two switches as edge devices only. This means I could use active gateway or VRRP for layer 3 HA...

... but ... I now have the problem that the hosts at each site need to be dual-connected for path redundancy (there is not enough fiber to cross-connect them between sites), so each host's NIC team would connect to one VSX member and one edge switch. The hosts do not require 802.3ad/LACP. They will be vSphere hosts with "Route Based on Originating Virtual Port" configured on each NIC team.
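To illustrate why these hosts should not need a multi-chassis LAG, here is a minimal Python sketch of a simplified model of "Route Based on Originating Virtual Port": each virtual port is pinned to exactly one active uplink, so a given VM's MAC is learned on only one switch at a time, and ports move to a surviving uplink if one fails. The modulo-based selection, the port IDs, and the uplink names are assumptions for illustration only, not VMware's exact internal algorithm.

```python
from typing import Dict, List


def assign_uplinks(port_ids: List[int], uplinks: List[str]) -> Dict[int, str]:
    """Pin each virtual port to exactly one active uplink (simplified model)."""
    if not uplinks:
        raise ValueError("no active uplinks")
    # ASSUMPTION: port ID modulo the number of active uplinks picks the NIC.
    # The real vSphere selection may differ; only the "one port, one uplink"
    # property matters for this illustration.
    return {pid: uplinks[pid % len(uplinks)] for pid in port_ids}


# Hypothetical host: one NIC cabled to a VSX member, one to an edge switch.
uplinks = ["vmnic0-to-vsx-member", "vmnic1-to-edge-switch"]
ports = [10, 11, 12, 13]  # hypothetical virtual port IDs

# Normal operation: ports are spread across both uplinks, one uplink each.
normal = assign_uplinks(ports, uplinks)

# Edge switch failure: every port is re-pinned to the surviving uplink.
failover = assign_uplinks(ports, [u for u in uplinks if u != "vmnic1-to-edge-switch"])

print(normal)
print(failover)
```

Because no VM port ever uses both uplinks at once, the two upstream switches never need to present a single logical LAG to the host; they only need to carry the same VLANs.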

I think this one will work, but it's a bit "clumsy" and could invite human error at some later stage when I'm not around to make sure the rules are followed.

Note that all switches cascaded from either site are single-path only.

Any commentary on this one would be appreciated.

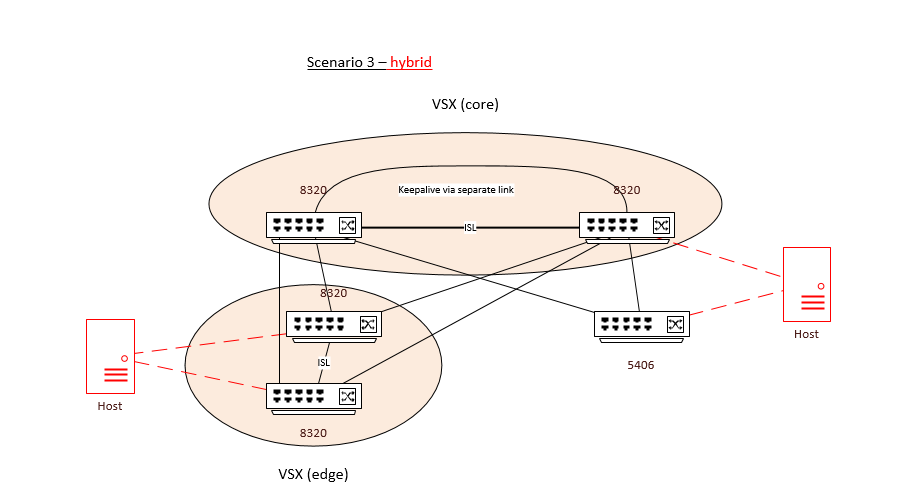

Scenario 3

This one is a hybrid of the two above. I might be able to reclaim some SFP+ ports on a 5406 edge switch at one of the two sites. This would let me solve the host pathing problem at that site, while still leaving it unsolved at the other. Site 2 is for backup and DR, so if necessary we can consider running the NIC teams as active/standby at that site.

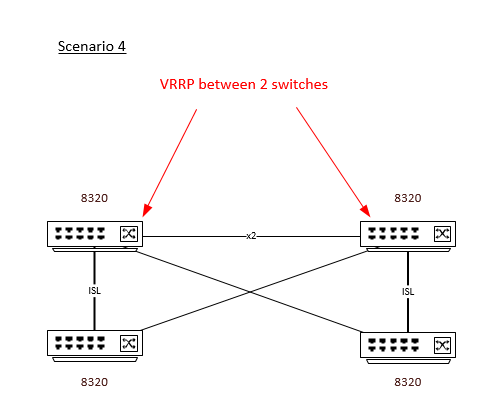

Scenario 4

The fallback scenario is STP + VRRP, something like this. I know how to do this, but it's the last resort... and in general it would be a disappointing result given the technology available.
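Scenario 1 and this fallback both rely on VRRP to move the gateway between sites. As a rough illustration, this Python sketch models only the generic VRRP master election from RFC 5798: the highest priority wins, and a tie goes to the router with the higher primary IPv4 address. The router names, priorities, and addresses are hypothetical, and preemption, object tracking, and any 8320-specific behavior are deliberately not modeled.

```python
from dataclasses import dataclass
from ipaddress import IPv4Address
from typing import List, Optional


@dataclass
class VrrpRouter:
    name: str
    priority: int  # 1-254 for routers that do not own the virtual IP; 255 = address owner
    primary_ip: IPv4Address
    alive: bool = True  # False simulates a failed router or site


def elect_master(routers: List[VrrpRouter]) -> Optional[VrrpRouter]:
    """Pick the master per RFC 5798: highest priority, then highest primary IP."""
    candidates = [r for r in routers if r.alive]
    if not candidates:
        return None
    return max(candidates, key=lambda r: (r.priority, int(r.primary_ip)))


# Hypothetical: one VRRP-enabled 8320 per site, sharing one gateway VLAN.
site1 = VrrpRouter("site1-8320-a", 110, IPv4Address("10.0.10.2"))
site2 = VrrpRouter("site2-8320-a", 100, IPv4Address("10.0.10.3"))

master = elect_master([site1, site2])
print(master.name if master else "no master")  # site 1 wins on priority

site1.alive = False  # simulate losing site 1
master = elect_master([site1, site2])
print(master.name if master else "no master")  # gateway moves to site 2
```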